The ABC-XYZ analysis is a very popular tool in supply chain management. It is based on the Pareto principle, i.e. the expectation that a minority of cases has a disproportionate impact on the whole. This is often referred to as the 80/20 rule, with the classical example that 80% of the wealth is owned by 20% of the population (current global statistics suggest that 1% of the global population holds more than 50% of the wealth, but that is beyond the scope of this post!).

ABC analysis

Let us first consider the ABC part of the analysis, which ranks items in terms of importance. That sentence is intentionally vague about what importance is and which items should be considered. I will first explain the mechanics of ABC analysis and then get back to these. Suppose for now that we measure importance by average (or total) sales over a given period and that we have 100 SKUs (Stock Keeping Units).

To make the example easier to follow I will explain the ideas behind it, but also provide R code to try it out. First let us get some data. I will use the M3-competition dataset that is available in the package Mcomp:

    # Let's create a dataset to work with
    # Load Mcomp dataset - or install it first if not present
    if (!require("Mcomp")){
      install.packages("Mcomp")
      library("Mcomp")  # load the package after installing it
    }
    # Create a subset of 100 monthly series with 5 years of data
    # Each column of array sku is an item and each row a monthly historical sale
    sku <- array(NA,c(60,100),dimnames=list(NULL,paste0("sku.",1:100)))
    Y <- subset(M3,"MONTHLY")
    for (ts in 1:100){
      # Join in-sample (x) and out-of-sample (xx) data and keep the first 60 months
      sku[,ts] <- c(Y[[ts]]$x,Y[[ts]]$xx)[1:60]
    }

Now we calculate the mean volume of sales for each SKU and rank them from maximum to minimum:

    # Calculate mean sales per SKU
    sku.m <- colMeans(sku)
    # Order them from largest to smallest
    sku.o <- order(sku.m,decreasing=TRUE)
    sku.s <- sku.m[sku.o]

Typically in ABC analysis we consider three classes, each containing a percentage of the items. Common values are: A – 20% top items; B – 30% middle items; and C – 50% bottom items. Given the ranking we have obtained based on mean sales, we can now easily identify which item belongs to which class.
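As a minimal sketch of that last step, the helper below turns an importance score into class labels. The function name `abc.classes` and the simulated scores are my own illustration, not part of the post or of TStools; with the data above, `sku.m` would take the place of `imp`.

```r
# Hypothetical helper: assign ABC classes from an importance score,
# using percentage cut-offs on the number of items
abc.classes <- function(importance, prc = c(0.2, 0.3, 0.5)) {
  stopifnot(abs(sum(prc) - 1) < 1e-8)
  n <- length(importance)
  # Rank 1 is the most important item
  rnk <- rank(-importance, ties.method = "first")
  # Upper rank limit of each class, e.g. 20, 50, 100 when n = 100
  upper <- round(cumsum(prc) * n)
  cls <- cut(rnk, breaks = c(0, upper), labels = LETTERS[seq_along(prc)])
  names(cls) <- names(importance)
  cls
}

# Simulated mean sales for 100 SKUs (an assumption, for illustration only)
set.seed(1)
imp <- setNames(rlnorm(100, meanlog = 5, sdlog = 1), paste0("sku.", 1:100))
cls <- abc.classes(imp)
table(cls)
```

Working on ranks rather than on the values themselves keeps the class sizes fixed at 20, 30 and 50 items, whatever the distribution of importance looks like.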

To find the concentration of importance in each class, we can consider the cumulative sales:

    # Calculate cumulative mean sales on ordered items
    sku.c <- cumsum(sku.s)
    # Find concentration per class
    abc.c <- sku.c[c(20,50,100)]/sku.c[100]
    abc.c[2:3] <- abc.c[2:3] - abc.c[1:2]
    abc.c <- array(abc.c,c(3,1),dimnames=list(c("A","B","C"),"Concentration"))
    abc.c <- round(100*abc.c,2)
    print(abc.c)

This gives as a result the following:

| Class | Concentration |
|---|---|
| A | 29.77% |
| B | 34.12% |
| C | 36.10% |

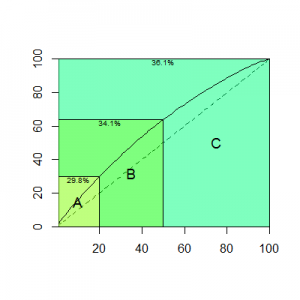

You can use the function abc in TStools to do all these calculations quickly and get a neat visualisation of the result (Fig. 1).

It is easy to see that in this example the concentration for A category items is in fact quite low. The 20% top items correspond to almost 30% of importance in terms of volume of sales. In my experience this is atypical: usually the A category dominates, resulting in curves that saturate much faster.

Let me return to the question of what importance is. In the example above I used mean sales over a period. Although this is very easy to calculate and requires no additional inputs, it is hardly appropriate in most cases. For example, consider an item with minimal profit margin that has very high volume of sales and an item with massive profit margin with mediocre volume of sales. Which one is more important? There is no absolutely correct answer; it depends on the business context and objectives. Considering the sales value, profit margins or some pre-existing indicator of importance that may already be in place is more appropriate. In short, depending on the criteria we set, we can get any result we want from the ABC analysis, so it is important to choose carefully.
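To make the point concrete, here is a small sketch with three made-up items. All volumes, prices and margins are hypothetical numbers chosen for illustration; the only claim is that the ranking depends on the criterion.

```r
# Illustrative numbers only: three hypothetical items
items <- data.frame(
  item   = c("high.volume", "high.margin", "average"),
  volume = c(10000, 800, 3000),   # units sold per period
  price  = c(1.00, 25.00, 5.00),  # selling price per unit
  margin = c(0.02, 0.40, 0.15)    # profit margin as a share of price
)
items$value  <- items$volume * items$price   # sales value
items$profit <- items$value * items$margin   # profit contribution

# Rank 1 = most important under each criterion
ranks <- sapply(items[, c("volume", "value", "profit")],
                function(x) rank(-x))
rownames(ranks) <- items$item
print(ranks)
```

The high-volume item is ranked first by volume and last by sales value and by profit, while the high-margin item moves from last to first.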

How many classes should we use? Are three enough? Should we use more? What percentages? To answer these questions one has to know what the ABC analysis is done for. I have seen companies using 4 classes (ABCD) or even more; however, often these are not tied to a clear decision, and therefore I would argue they were of little benefit. Unless your classification is actionable, there is limited value you can get out of it. Three classes have the advantage that they separate the assortment into three categories of high, medium and low importance, which is easy to communicate. What about the percentages? Again there is no right or wrong. The 20% cut-off point for the A class originates from the Pareto principle, and the rest follow. Again, if the decision context is known, one might make a more informed decision on the cut-off points, though I would argue that it is the pairs of cut-off and concentration that matter.

The third point that one has to be aware of is that ABC analysis is very sensitive to the number of items that go into the analysis. For instance, if we added another 100 SKUs to the previous example, the classification into A, B and C classes would change substantially. The results are always relative to the set of items included in the analysis. What does this mean for practice? The results of an ABC analysis done for the SKUs in a market segment will not stay the same if we consider the same SKUs in a super-segment that contains more SKUs. An A product in a specific market may be C in the overall market. So the scope of the analysis really defines the results. Again, what is the decision that ABC analysis will support?
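The effect of scope can be sketched with constructed numbers. Everything below is hypothetical: a 100-item segment and 100 further items from the wider market that mostly sell more, classified with the same 20/30/50 split, first within the segment and then within the combined set.

```r
# Constructed (not real) importance scores
segment <- setNames(100:1, paste0("seg.", 1:100))          # items in our segment
others  <- setNames(51.5 + (0:99), paste0("oth.", 1:100))  # rest of the market

# 20/30/50 split based on the rank within whatever item set is passed in
classify <- function(x, prc = c(0.2, 0.3, 0.5)) {
  rnk <- rank(-x, ties.method = "first")
  cut(rnk, breaks = round(c(0, cumsum(prc)) * length(x)),
      labels = c("A", "B", "C"))
}

cls.segment <- classify(segment)
# Classify all 200 items together, then look at the segment's items only
cls.market  <- classify(c(segment, others))[1:100]

# Rows: class within the segment; columns: class within the wider market
table(segment = cls.segment, market = cls.market)
```

In this constructed case none of the segment's 20 A items remains an A item in the wider market; all of them drop to B, and most of the segment's B items drop to C.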

You may have already spotted that I am somewhat critical of the analysis. Let me summarise the issues. The analysis is very sensitive to the metric of importance, the number of classes and cut-off points, as well as the number of items considered. There is no best solution, as it always depends on the decision context. A final relevant criticism is that ABC analysis provides a snapshot in time and does not show any dynamics. Is an item gaining or losing in importance?

XYZ analysis

The XYZ analysis focuses on how difficult an item is to forecast, with X being the class with the easier items and Z the class with the more difficult ones. To perform the XYZ analysis one follows the same logic as for ABC. Therefore the important question is how to define a metric of forecastability. Let me mention here that the academic literature has attempted to put a formula to this quantity, I would argue unsuccessfully. What I will discuss here are far from perfect solutions, but they at least have some practical advantages.

Textbooks have supported the use of the coefficient of variation. This is so flawed that every time I read it… well, let me explain the issues. The coefficient of variation is a scaled version of the standard deviation of the historical sales. This tells us nothing about how easy or difficult sales are to forecast. Let me illustrate this with a simple example. Consider an item that has more or less level sales with a lot of variability and an item that has seasonal sales with no randomness whatsoever. The first is difficult to forecast, while the second is as easy as it gets (just copy the previous season as your forecast!). As Fig. 2 illustrates, the coefficient of variation would not indicate this, giving the seasonal series the higher value.

Fig. 2: Example series that the coefficient of variation fails to indicate which one is more difficult to forecast.
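A rough sketch of this kind of comparison is given below. It is not the code behind Fig. 2; the two series are simulated under my own assumptions (a noisy level series and a perfectly repeating seasonal pattern), and the helper names are hypothetical.

```r
set.seed(123)
n <- 60  # five years of monthly data

# Level series with a lot of randomness
level.noisy <- 100 + rnorm(n, mean = 0, sd = 20)
# Deterministic seasonal series that repeats exactly every 12 months
seasonal <- rep(100 + 60 * sin(2 * pi * (1:12) / 12), times = n / 12)

# Coefficient of variation: standard deviation scaled by the mean
cv <- function(y) sd(y) / mean(y)

# Mean absolute error of a seasonal naive forecast
# (the forecast is the value observed m periods earlier)
snaive.mae <- function(y, m = 12) {
  mean(abs(y[(m + 1):length(y)] - y[1:(length(y) - m)]))
}

round(c(cv.level = cv(level.noisy), cv.seasonal = cv(seasonal)), 3)
c(mae.level = snaive.mae(level.noisy), mae.seasonal = snaive.mae(seasonal))
```

The seasonal series has the higher coefficient of variation (about 0.43, against roughly 0.2 for the noisy level series), yet copying the previous season forecasts it with zero error.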

A better measure is forecast errors, which would directly relate to the non-forecastable parts of the series. This introduces a series of different questions: which forecasting method to use? which error metric? should it be in-sample or out-of-sample errors? Again, there is no perfect answer. Ideally we would like to use out-of-sample errors, but that would require us to have a history of forecast errors from an appropriate forecasting method, or conduct a simulation experiment with a holdout.

As for the method, this is perhaps the most complicated question. A single method would not be adequate, for the same reason that the coefficient of variation is not. The latter implies that the forecasting method is the arithmetic mean (the value from which the standard deviation is calculated). An appropriate set of methods should be able to cope with level, trend and seasonal time series alike. A simplistic solution is to use naive (random walk) and seasonal naive, with a simplistic selection routine. The difference between seasonal and non-seasonal time series is typically substantial enough to make even weak selection rules work fine. An even better solution is to use a family of models, such as exponential smoothing, and do proper model selection, for instance using AIC or similar information criteria.
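The simplistic naive versus seasonal naive selection could be sketched as follows. The function `select.naive` and the two test series are hypothetical, and the selection rule (lowest in-sample mean absolute error over the same periods) is just one possible weak rule, not necessarily the one used in TStools.

```r
# Pick between naive and seasonal naive by in-sample mean absolute error,
# evaluated over the periods where both forecasts are available
select.naive <- function(y, m = 12) {
  n <- length(y)
  idx <- (m + 1):n
  err <- c(naive  = mean(abs(y[idx] - y[idx - 1])),   # previous observation
           snaive = mean(abs(y[idx] - y[idx - m])))   # same period last season
  list(method = names(which.min(err)), mae = min(err), errors = err)
}

# Two constructed monthly series: a linear trend and a repeating seasonal pattern
y.trend    <- 100 + 2 * (1:60)
pattern    <- c(80, 85, 95, 110, 130, 150, 160, 150, 125, 105, 90, 82)
y.seasonal <- rep(pattern, times = 5)

select.naive(y.trend)$method     # "naive"
select.naive(y.seasonal)$method  # "snaive"
```

The error of the selected method can then serve as the forecastability score for each item, once it has been made comparable across items of different scale.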

The error metric should be robust (do not use percentage errors for this!) and scale independent. I will not go into the details of this discussion, but instead refer to a recent presentation I gave on the topic. The first few slides should give you an idea of my views.
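To illustrate why percentage errors are a poor choice here, the sketch below compares them with a mean absolute error scaled by the mean of the series. The scaled error is my own choice of example, one of several scale-independent options, and the numbers are made up.

```r
# Made-up actuals and forecasts; note the single very small actual
actual   <- c(120, 0.5, 80, 100)
forecast <- c(100, 20, 90, 95)

# Mean absolute percentage error: each error is divided by its own actual
mape <- function(a, f) mean(100 * abs(a - f) / abs(a))

# Mean absolute error scaled by the mean level of the series
scaled.mae <- function(a, f) mean(abs(a - f)) / mean(a)

mape(actual, forecast)        # dominated by the period with the tiny actual
scaled.mae(actual, forecast)

# Scale independence: changing the units leaves the scaled error unchanged
all.equal(scaled.mae(actual, forecast),
          scaled.mae(1000 * actual, 1000 * forecast))
```

One small observation pushes the percentage error above 900%, while the scaled error stays below 0.2 and can be compared across items sold in very different volumes.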

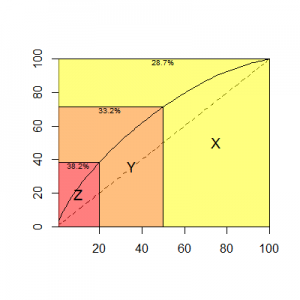

The function xyz in the TStools package for R allows you to do this part of the analysis automatically but, as illustrated for ABC, it is easy to do manually. Fig. 3 provides the result for the same dataset. Similarly we can see what percentage of our assortment is responsible for what percentage of our forecast errors, and so on.
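A manual version, mirroring the ABC steps, might look like the sketch below. The errors are simulated, and the split (Z as the 20% hardest items, Y the next 30%, X the 50% easiest) is an assumption that simply copies the ABC percentages; it is not necessarily what the xyz function does.

```r
set.seed(10)
# Simulated scaled forecast errors for 100 SKUs (stand-in for real errors)
err <- setNames(rgamma(100, shape = 2, rate = 4), paste0("sku.", 1:100))

# Order from hardest (largest error) to easiest
err.s <- sort(err, decreasing = TRUE)
n <- length(err.s)

# Assumed split: Z = 20% hardest, Y = next 30%, X = 50% easiest
xyz.cls <- rep(c("Z", "Y", "X"), times = round(c(0.2, 0.3, 0.5) * n))
names(xyz.cls) <- names(err.s)

# Share of the total forecast error that falls in each class
conc <- tapply(err.s, factor(xyz.cls, levels = c("Z", "Y", "X")), sum) / sum(err.s)
round(100 * conc, 2)
```

Because Z holds the items with the largest errors, its share of the total error is always at least its share of the items; how far above 20% it lies indicates how concentrated the forecasting difficulty is.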

Note that the same criticisms made of the ABC analysis apply to the XYZ analysis as well.

Putting everything together – the ABC-XYZ analysis

Once we have characterised our assortment for both ABC and XYZ classes, we can put these two dimensions of analysis together, as Fig. 4 illustrates.

Fig. 4: ABC-XYZ classification. The first 100 monthly series of the M3-competition are characterised in terms of importance and forecastability.
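Combining the two dimensions amounts to a cross-tabulation of the class labels. The sketch below uses simulated importance and error scores and a hypothetical helper `split3`; it is not the code behind Fig. 4, and the layout of the printed table need not match the orientation of the figure.

```r
set.seed(5)
n <- 100
importance <- rlnorm(n, meanlog = 5, sdlog = 1)  # e.g. mean sales (simulated)
error      <- rgamma(n, shape = 2, rate = 4)     # e.g. scaled forecast error (simulated)

# Split items into three classes by rank: the highest scores get the first label
split3 <- function(score, labels, prc = c(0.2, 0.3, 0.5)) {
  rnk <- rank(-score, ties.method = "first")
  cut(rnk, breaks = round(c(0, cumsum(prc)) * length(score)), labels = labels)
}

abc <- split3(importance, c("A", "B", "C"))  # A = most important
xyz <- split3(error, c("Z", "Y", "X"))       # Z = largest errors, hardest to forecast
xyz <- factor(xyz, levels = c("X", "Y", "Z"))

# Number of items in each of the nine ABC-XYZ cells
tab <- table(ABC = abc, XYZ = xyz)
print(tab)
```

The row and column totals are fixed by the cut-offs (20/30/50 items), so the information is in how the items spread across the cells, for example how many land in AZ.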

Let us consider what these classes indicate. I will discuss the four corners of the matrix:

- AX: Very important items, but relatively easy to forecast;

- CX: Relatively unimportant items that are relatively easy to forecast;

- AZ: Very important items that are hard to forecast;

- CZ: Relatively unimportant items that are hard to forecast.

In-between classes are likewise easy to interpret. This classification can be quite handy for allocating resources to the forecasting process. Suppose for instance that we have a team of experts adjusting forecasts. When gathering additional information to enrich statistical forecasts, it is more meaningful to dedicate time to the lower-left corner of the matrix than to the top-right corner. I am not referring to a single cell of the matrix, but to the wider neighbourhood. Alternatively, consider the case that a new forecasting system is implemented. Ideally we would like everything to run smoothly from day 1. Ideally… in practice things go wrong. Again, we would like to be more careful with the lower-left corner of the matrix.

Following the same logic, one would expect that it is easier to improve accuracy on the top part of the matrix than on the lower part. If we find that we are doing relatively badly in terms of accuracy on AX items, we know that we are messing up on important items that should be relatively easy to forecast.

I often make the argument for automating the forecasting process using the ABC-XYZ analysis. A large chunk of the assortment (top-right side) can be automated relatively safely, as these are items that are not that crucial and are easier to forecast. Suppose you need to produce forecasts for several thousand items (or even more!): there is no chance you can dedicate equal attention to all forecasts. Similarly, simple alerts should be able to deal with AX products. But AZ products are difficult to forecast and, being important, are the ones we should get right. To these we should dedicate more resources, and they are potentially difficult to fully automate (there is adequate evidence in the literature that experts always add value overall).

Concluding remarks

Everything is relative with the ABC-XYZ analysis! I have avoided mentioning even once an error value as a cut-off point to define easy and difficult to forecast. Such logic is flawed: we can reasonably talk only about relative performance, and we should not expect the same error or importance values to be applicable to different assortments.

I have argued several times that intermittent demand forecasting is a mess. True to their nature, intermittent items also mess up ABC-XYZ analysis. The reason is that they are typically low volume and would take over the C class of ABC, pushing other relatively unimportant items to the A and B classes (depending on how many intermittent items one permits in the analysis). Furthermore, measuring accuracy for intermittent demand forecasting with standard error metrics is wrong, and would typically result in forecast errors that are not comparable to those of fast moving items. That would distort the results of XYZ analysis. A good idea is to separate intermittent items from the fast moving items before conducting an ABC-XYZ analysis. Similarly, new products will distort the analysis as well.
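One crude way to do this separation is to flag items by the share of periods with zero sales. The sketch below uses constructed series, and the 30% threshold is purely an assumption for illustration; a proper demand classification scheme would be preferable in practice.

```r
# Constructed history: 60 months, three fast moving and three intermittent items
sales <- cbind(
  sku.1 = 50 + (1:60 %% 12),
  sku.2 = 200 + (1:60 %% 7),
  sku.3 = 120 - (1:60 %% 5),
  sku.4 = rep(c(0, 0, 0, 4), times = 15),        # demand every fourth month
  sku.5 = rep(c(0, 0, 7, 0, 0, 0), times = 10),  # demand every sixth month
  sku.6 = rep(c(0, 3), times = 30)               # demand every second month
)

# Share of periods with zero sales per item
zero.share <- colMeans(sales == 0)

# Assumed threshold: more than 30% zero periods counts as intermittent
threshold <- 0.3
is.intermittent <- zero.share > threshold

# Keep the two groups apart; ABC-XYZ is then run on the fast moving items only
sales.fast <- sales[, !is.intermittent, drop = FALSE]
sales.int  <- sales[, is.intermittent, drop = FALSE]
colnames(sales.fast)
colnames(sales.int)
```

Using `drop = FALSE` keeps both subsets as matrices even if only one item ends up in a group.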

ABC-XYZ analysis can be a powerful diagnostic tool, as well as very helpful for allocating resources in the forecasting process. However, if it is not tied to actionable decisions, it is difficult to set it up correctly in terms of what is a good metric for importance or forecastability, how many classes and so on. It certainly is not a magic bullet and suffers from several weaknesses, but which tool does not?

But when using SAP, when should we use ND, VB or PD? Some explanation would help.

That is, when using SAP, how do we decide whether to use ND, PD or VB on the basis of the MRP type?

Thanks for your great articles!

Could you upload the code with which you have generated the figure 2 or any other where this happens? I haven’t been able to reproduce it.

Sure, here is a code snippet to produce similar results:

could you please write the whole code for abcxyz analysis

You can find everything on github here: https://github.com/trnnick/tsutils

Great Article!

Can we use ABC-XYZ analysis for purposes other than allocating resources in the forecasting process?

What are other actionable decisions a business can take from ABC-XYZ analysis?

Thanks 🙂

Yes, ABC is a standard operations tool that helps prioritise resources (or identify potential pains) in inventory, etc. ABC and XYZ both play a role in some approaches to setting differential service levels across the product assortment. In my experience, they are also helpful for shaping the S&OP discussions in companies. At their heart, since they are Pareto analyses, they have appeared in various uses in operational research and management in general. Arguably some of these uses are quite insightful, and others rather misguided – my main critique being that any classification is relative and not absolute: change the assortment and the result changes.